Exploring the Power and Limitations of GPT-3

Chapter 1: Introduction to GPT-3

GPT-3, or Generative Pre-trained Transformer 3, is a sophisticated language model developed by OpenAI, a leading AI research organization based in San Francisco. This model boasts an impressive 175 billion parameters, enabling it to generate text that closely resembles human writing. It has been trained on extensive datasets, encompassing hundreds of billions of words.

David Chalmers once remarked, “I am open to the idea that a worm with 302 neurons is conscious, so I am open to the idea that GPT-3 with 175 billion parameters is conscious too.” This statement captures the intrigue surrounding GPT-3, which has garnered significant media attention and sparked the creation of numerous startups leveraging its capabilities. However, it is crucial to comprehend the underlying mechanics of GPT-3 rather than view it merely as a magical solution to all problems.

In this article, I will provide a comprehensive overview of GPT-3, detailing its functionality, strengths, and limitations, as well as guidance on how to utilize this powerful tool.

Chapter 2: The Mechanics of GPT-3

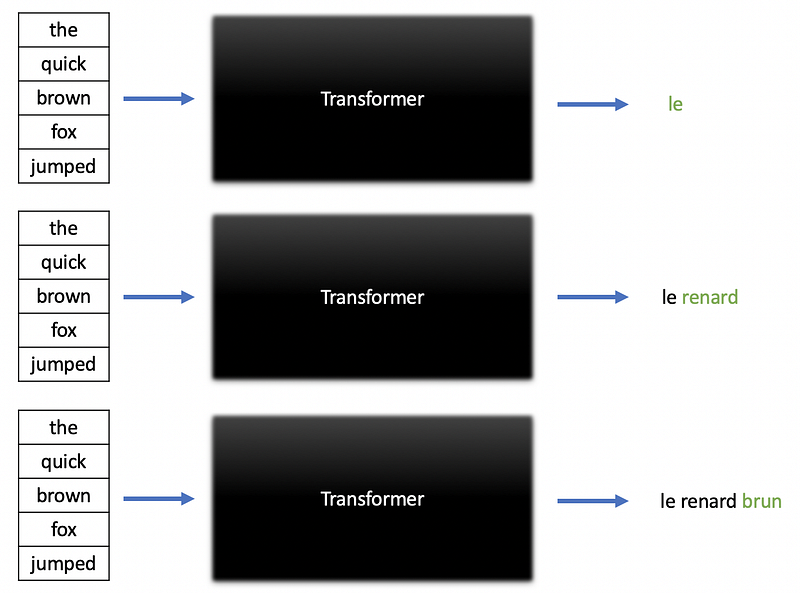

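At its core, GPT-3 is a transformer model. Transformers were originally designed for sequence-to-sequence tasks, in which the model generates an output sequence from an input sequence. GPT-3 itself uses a decoder-only variant that generates text one token at a time, with each token conditioned on the text that precedes it. Transformers excel at a range of text-related tasks, including question answering, summarization, and translation.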

The image above illustrates how a transformer model progressively generates a translation in French from an English input.

Unlike LSTMs, which process a sequence one token at a time, transformer models use units known as attention blocks to determine which parts of a text sequence to focus on. A single transformer may contain many attention blocks, each of which can learn to attend to different linguistic elements, from parts of speech to named entities. For a deeper dive into transformers, refer to my previous article on the subject.
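As a rough illustration of what happens inside an attention block, here is a minimal sketch of single-head scaled dot-product attention, the operation introduced in "Attention Is All You Need." It is a simplification under stated assumptions: the two-dimensional toy embeddings are made up, the learned query, key, and value projections of a real model are omitted (the embeddings are used directly), and there is no causal masking. The names `softmax` and `attention` are illustrative, not taken from any library.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Single-head scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors
        output = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Toy embeddings for a three-token sequence (hypothetical values)
tokens = ["the", "cat", "sat"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Self-attention: queries, keys, and values all come from the same sequence
outputs, weights = attention(embeddings, embeddings, embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sequence. A real attention block runs many such heads in parallel, each with its own learned projections, which is what allows different heads to pick up on different patterns.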

Section 2.1: The Scale of GPT-3

GPT-3 is the third iteration of OpenAI's GPT series. Its standout feature is its size: with 175 billion parameters, it is more than 100 times larger than GPT-2 (1.5 billion parameters) and approximately 10 times the size of Microsoft's Turing NLG model (17 billion parameters). Following the transformer architecture described earlier, GPT-3 consists of 96 attention blocks, each containing 96 attention heads, which makes it essentially a colossal transformer.
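As a back-of-the-envelope check on the 175 billion figure, a transformer's parameter count can be estimated from its depth and width. The sketch below uses a common rule of thumb of about 12 × d_model² weights per block, ignoring embeddings and biases. It assumes a model width of 12,288, a value taken from the GPT-3 paper rather than from this article, and a feed-forward layer four times as wide as the model. The function name is illustrative.

```python
N_LAYERS = 96    # attention blocks, as stated above
N_HEADS = 96     # attention heads per block, as stated above
D_MODEL = 12288  # assumption: model width reported in the GPT-3 paper


def approx_transformer_params(n_layers, d_model):
    """Rough weight count, ignoring embeddings, biases, and layer norms."""
    # Attention: query, key, value, and output projections, each d_model x d_model
    attention_weights = 4 * d_model ** 2
    # Feed-forward: two matrices of size d_model x 4*d_model (assumed 4x expansion)
    feed_forward_weights = 8 * d_model ** 2
    return n_layers * (attention_weights + feed_forward_weights)


estimate = approx_transformer_params(N_LAYERS, D_MODEL)
head_size = D_MODEL // N_HEADS

print(f"Estimated parameters: {estimate / 1e9:.1f} billion")
print(f"Dimensions per attention head: {head_size}")
```

The estimate comes out just under 175 billion, which suggests that almost all of the model's parameters sit in the 96 stacked blocks rather than in the embedding tables.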

The foundational research paper indicates that GPT-3 was trained using a blend of substantial text datasets, including:

- Common Crawl

- WebText2

- Books1

- Books2

- Wikipedia Corpus

Together, these datasets span a vast array of web pages, a massive collection of books, and English-language Wikipedia, enabling GPT-3 to generate text in English and several other languages.

Chapter 3: Why GPT-3 is Remarkable

Since its introduction, GPT-3 has made waves due to its versatility in performing a multitude of natural language tasks, generating text that closely mimics human writing. Its capabilities encompass a range of functions, including:

- Text classification (e.g., sentiment analysis)

- Question answering

- Text generation

- Text summarization

- Named-entity recognition

- Language translation

Given its extensive exposure to text, GPT-3 can perform many reading comprehension and writing tasks at a level that approaches human performance, having read far more text than any person could. This breadth is what makes GPT-3 so powerful, and it has led to numerous startups that use it as a general-purpose tool for language processing problems.

Chapter 4: Understanding the Limitations of GPT-3

Despite being the largest and arguably the most formidable language model currently available, GPT-3 has inherent limitations. Each machine learning model, no matter how advanced, has its constraints. Below are some of GPT-3's notable limitations:

- Lack of Long-term Memory: Unlike humans, GPT-3 does not retain knowledge from prolonged interactions.

- Interpretability Issues: The complexity of GPT-3 makes it challenging to interpret or explain its outputs.

- Fixed Input Size: The transformer architecture has a predetermined maximum input size. For GPT-3, the context window is 2,048 tokens (roughly 1,500 words), and the prompt and the generated text must fit within it together.

- Slow Inference Times: Due to its substantial size, GPT-3 takes longer to generate predictions than smaller models.

- Bias: The model's performance is only as good as the data it was trained on, which means it can exhibit biases, as highlighted in studies revealing anti-Muslim bias in large language models.

While GPT-3 demonstrates impressive capabilities, these limitations emphasize that it is far from being a flawless language model or a representation of artificial general intelligence (AGI).
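To make the fixed input size and the lack of long-term memory concrete, here is a minimal sketch of how an application might trim a long prompt so that it fits the model's window. It assumes a window of 2,048 tokens (the figure given in the GPT-3 paper) and uses whitespace-separated words as a stand-in for the model's real subword tokens, so the counts are only approximate. The `fit_prompt` helper is hypothetical and not part of any API.

```python
MAX_CONTEXT_TOKENS = 2048  # assumption: GPT-3 context window from the paper


def fit_prompt(prompt, max_new_tokens, context_size=MAX_CONTEXT_TOKENS):
    """Keep only the most recent tokens so prompt plus completion fit the window."""
    budget = context_size - max_new_tokens
    if budget <= 0:
        raise ValueError("max_new_tokens leaves no room for the prompt")
    # Assumption: whitespace splitting approximates the real tokenizer
    tokens = prompt.split()
    # Drop the oldest tokens first; whatever is cut is invisible to the model
    kept = tokens[-budget:]
    return " ".join(kept)


# A 3,000-word conversation history, reserving 48 tokens for the reply
history = " ".join(f"word{i}" for i in range(3000))
trimmed = fit_prompt(history, max_new_tokens=48)
print(len(trimmed.split()), "tokens kept out of", len(history.split()))
```

Anything trimmed from the front of the prompt is simply gone from the model's point of view, which is why long conversations appear to lose earlier context.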

Chapter 5: How to Access and Use GPT-3

Currently, GPT-3 is not open-source; OpenAI has opted to provide access via a commercial API. Interested users must complete the OpenAI API Waitlist Form to join the queue for access.

OpenAI also offers a specialized program for academic researchers aiming to utilize GPT-3. For those interested in academic inquiries, it is advisable to fill out the Academic Access Application.

Although GPT-3 is not open for public use, its predecessor, GPT-2, remains open-source and can be accessed through Hugging Face’s transformers library. For those seeking to experiment with a powerful yet smaller language model, the documentation for Hugging Face’s GPT-2 implementation is a valuable resource.
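For readers who want to try GPT-2 right away, the following is a minimal sketch using the `pipeline` helper from Hugging Face's transformers library. It assumes the library and a backend such as PyTorch are installed, and that the model weights can be downloaded on first use. The wrapper function `generate_with_gpt2` and its default of 40 new tokens are illustrative choices, not part of the library.

```python
def generate_with_gpt2(prompt, max_new_tokens=40):
    """Generate a continuation of `prompt` with the open-source GPT-2 model."""
    # Imported here so that defining the function does not require the library
    from transformers import pipeline

    # "gpt2" is the smallest GPT-2 checkpoint on the Hugging Face Hub;
    # its weights are downloaded and cached the first time this runs
    generator = pipeline("text-generation", model="gpt2")
    results = generator(
        prompt,
        max_new_tokens=max_new_tokens,
        num_return_sequences=1,
    )
    # The pipeline returns a list of dicts, one per generated sequence
    return results[0]["generated_text"]
```

Calling `generate_with_gpt2("GPT-3 is a language model that")` returns the prompt followed by the model's continuation. Because generation involves sampling, the output will generally differ from run to run.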

Chapter 6: Conclusion

GPT-3 has attracted significant attention due to its status as the largest and arguably most powerful language model to date. Nevertheless, it is essential to acknowledge the limitations that prevent it from being a perfect solution or an example of AGI. If you wish to explore GPT-3 for research or commercial applications, you can apply for OpenAI’s API, which is currently in private beta. Alternatively, GPT-2 offers a publicly available and open-source option for those looking to work with a robust language model.

Sources:

- T. Brown, B. Mann, N. Ryder, et al., "Language Models are Few-Shot Learners," (2020), arXiv.org.

- A. Abid, M. Farooqi, and J. Zou, "Persistent Anti-Muslim Bias in Large Language Models," (2021), arXiv.org.

- Wikipedia, "Artificial general intelligence," (2021), Wikipedia the Free Encyclopedia.

- G. Brockman, M. Murati, P. Welinder, and OpenAI, "OpenAI API," (2020), OpenAI Blog.

- A. Vaswani, N. Shazeer, et al., "Attention Is All You Need," (2017), 31st Conference on Neural Information Processing Systems.